Hold onto your seats, tech enthusiasts! It’s time to explore an emerging technology that makes your life easier and helps you deliver quality and quantity at the same time.

CodeConductor is the first no-code platform that leverages the power of AI and RAG to simplify complex tasks.

Have you ever wondered how Google’s search engine or Siri’s voice assistant seems to know just what you’re looking for?

The secret sauce is often a blend of retrieval and generation, a dynamic duo in the AI world. But what happens when these two join forces? Enter Retrieval-Augmented Generation (RAG).

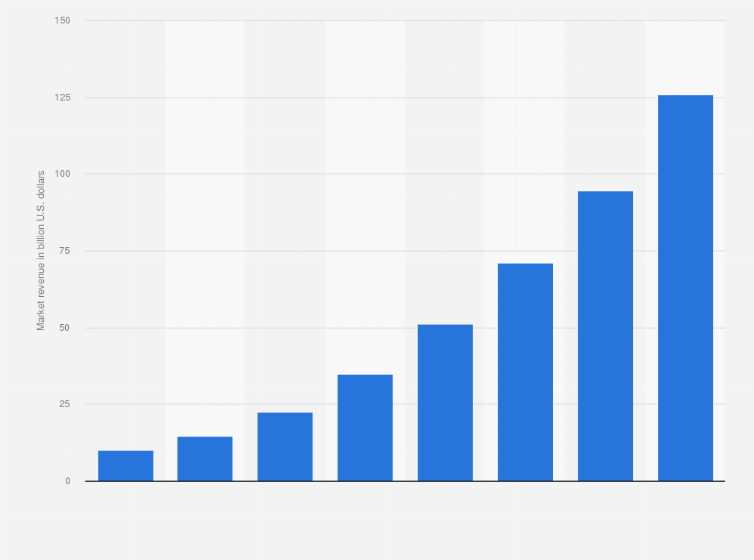

Did you know that by 2025, the AI market is expected to reach $126 billion? (Source: Statista)

With such a booming industry, staying ahead of the curve is paramount. But the questions are:

- How can RAG elevate your AI game?

- Will it revolutionize your business or research?

- What are its benefits?

Well, this comprehensive guide will take you from a novice to an expert, unraveling the intricacies of RAG. By the end, you’ll not only understand its operational mechanics but also how to implement it in real-world scenarios.

In This Post

- What is RAG AI (Retrieval-Augmented Generation)?

- How Does RAG Improve LLMs?

- The Science Behind RAG

- How Does RAG Model Work – A Step-by-Step Process

- The Role of LLM In RAG

- The Significance of External Data in RAG

- Benefits of Using RAG in AI

- Applications and Use-Cases

- Future Prospects of RAG and LLM

- A Practical Guide to Implementing a Basic RAG Model

- Conclusion

What is RAG AI (Retrieval-Augmented Generation)?

Retrieval-Augmented Generation, or RAG, is a smart technology that combines two powerful tools in artificial intelligence: information retrieval and text creation. Imagine it as a super-smart librarian who not only finds the book you need but also summarizes it for you in a perfect manner.

This technology enhances the performance of Large Language Models (LLMs), like ChatGPT, making them even smarter and more reliable.

Now, you may wonder how this emerging tech improves Large Language Models.

Am I right?

Well, don’t worry. Keep scrolling to find the answer.

How Does RAG Improve LLMs?

Enhancing Knowledge with Up-to-Date Information

LLMs like ChatGPT are knowledgeable, but they’re often limited to what they were trained on, which can quickly become outdated. RAG tackles this by connecting the LLM to a wealth of current information from external sources. This means when you ask about the latest trends or events, RAG ensures the LLM’s responses are grounded in the most recent data, making them more relevant and valuable.

Reducing Misinformation and Hallucinations

One of the challenges with LLMs is that they can sometimes make confident claims that seem credible but are false—this is known as hallucination. RAG reduces this risk by anchoring the LLM’s responses in factual data retrieved from reliable sources. So, instead of guessing or making things up, the LLM uses RAG to provide answers based on real, verifiable information.
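
One common way to anchor a response is to paste the retrieved passages into the prompt and tell the model to answer only from them. Here is a minimal sketch of that idea. The function name `build_grounded_prompt` and the exact prompt wording are illustrative assumptions, not part of any specific library.

```python
def build_grounded_prompt(question: str, passages: list[str]) -> str:
    """Assemble a prompt that asks the model to answer only from the passages."""
    # Number each passage so the model can refer to it as [1], [2], ...
    numbered = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "Answer the question using only the sources below. "
        "If the sources do not contain the answer, say you do not know.\n\n"
        f"Sources:\n{numbered}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_grounded_prompt(
    "When was the policy updated?",
    ["The refund policy was updated in March.", "Shipping takes five days."],
)
print(prompt)
```

The explicit "say you do not know" instruction gives the model a way out when the sources are silent, which is one simple guard against made-up answers.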

Improving Domain-Specific Responses

RAG significantly boosts the accuracy of LLMs when dealing with specialized knowledge or industry-specific jargon. By retrieving information from domain-relevant databases, RAG enables LLMs to understand and respond to queries with a level of expertise that would be impossible using only their base training data.

Streamlining Application Development

For developers, using RAG within applications like chatbots or customer service tools means they can deliver a smarter AI that understands and interacts with users more effectively.

Simplifying Complex Implementations

Finally, RAG simplifies the complex process of integrating external data into LLMs. Developers can use APIs and retrieval tools to connect their LLMs to the necessary data sources, making it easier to build powerful AI applications that can understand and generate responses based on a wide array of real-time information.
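
To picture how an application might plug different data sources into the same LLM call, here is a rough sketch. The `Retriever` interface, the `InMemoryRetriever` class, and the `echo_llm` stand-in are all hypothetical names made up for this example; a real application would swap in its own search tool and model API.

```python
from typing import Callable, Protocol


class Retriever(Protocol):
    """Anything that can return passages for a query (hypothetical interface)."""

    def retrieve(self, query: str, top_k: int) -> list[str]:
        ...


class InMemoryRetriever:
    """Toy retriever that ranks passages by words shared with the query."""

    def __init__(self, passages: list[str]) -> None:
        self.passages = passages

    def retrieve(self, query: str, top_k: int) -> list[str]:
        terms = set(query.lower().split())
        ranked = sorted(
            self.passages,
            key=lambda p: len(terms & set(p.lower().split())),
            reverse=True,
        )
        return ranked[:top_k]


def answer(query: str, retriever: Retriever, llm: Callable[[str], str], top_k: int = 2) -> str:
    """Fetch context from any retriever, then hand the combined prompt to the LLM."""
    context = "\n".join(retriever.retrieve(query, top_k))
    return llm(f"Context:\n{context}\n\nQuestion: {query}")


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call; it returns the prompt unchanged."""
    return prompt


retriever = InMemoryRetriever(["Orders ship in two days.", "Refunds take five days."])
result = answer("refunds", retriever, echo_llm, top_k=1)
print(result)
```

Because `answer` only depends on the `retrieve` method, the in-memory retriever could later be replaced by a database or API client without touching the rest of the code.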

The Science Behind RAG

Drawing from the insights of the research paper “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks,” the science behind RAG can be distilled into two key models:

Retrieval-Based Models

Retrieval-based models are adept at sifting through vast amounts of data to find the most relevant information. They are the backbone of search engines and recommendation systems.
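
To make this concrete, here is a small sketch of one classic retrieval technique: TF-IDF weighting with cosine similarity. It is only one of many possible approaches (modern RAG systems often use learned embeddings instead), and the helper names and sample documents are invented for illustration.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace; real systems use better tokenizers."""
    return text.lower().split()


def tf_idf_vectors(docs: list[str]) -> tuple[list[dict[str, float]], dict[str, float]]:
    """Return one TF-IDF vector per document plus the IDF table."""
    tokenized = [tokenize(d) for d in docs]
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    n = len(docs)
    # Smoothed IDF so a term found in every document keeps a small weight.
    idf = {term: math.log((1 + n) / (1 + df)) + 1 for term, df in doc_freq.items()}
    vectors = [
        {term: count * idf[term] for term, count in Counter(tokens).items()}
        for tokens in tokenized
    ]
    return vectors, idf


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank(query: str, docs: list[str]) -> list[int]:
    """Return document indices ordered from most to least similar to the query."""
    vectors, idf = tf_idf_vectors(docs)
    # Query terms never seen in the corpus get zero weight.
    q_vec = {t: c * idf.get(t, 0.0) for t, c in Counter(tokenize(query)).items()}
    scores = [(cosine(q_vec, vec), i) for i, vec in enumerate(vectors)]
    return [i for _, i in sorted(scores, key=lambda s: s[0], reverse=True)]


docs = [
    "the cat sat on the mat",
    "stock prices rose sharply today",
    "weather forecast predicts rain tomorrow",
]
ranking = rank("rain forecast", docs)
print(docs[ranking[0]])
```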

Generative Models

Generative models, like GPT-3, excel at creating new content based on the data they’ve been trained on. They can write essays, generate code, and even compose music.

The Fusion of Both

RAG is the harmonious combination of these two. It uses retrieval-based models to fetch relevant data and generative models to create coherent and contextually appropriate responses.

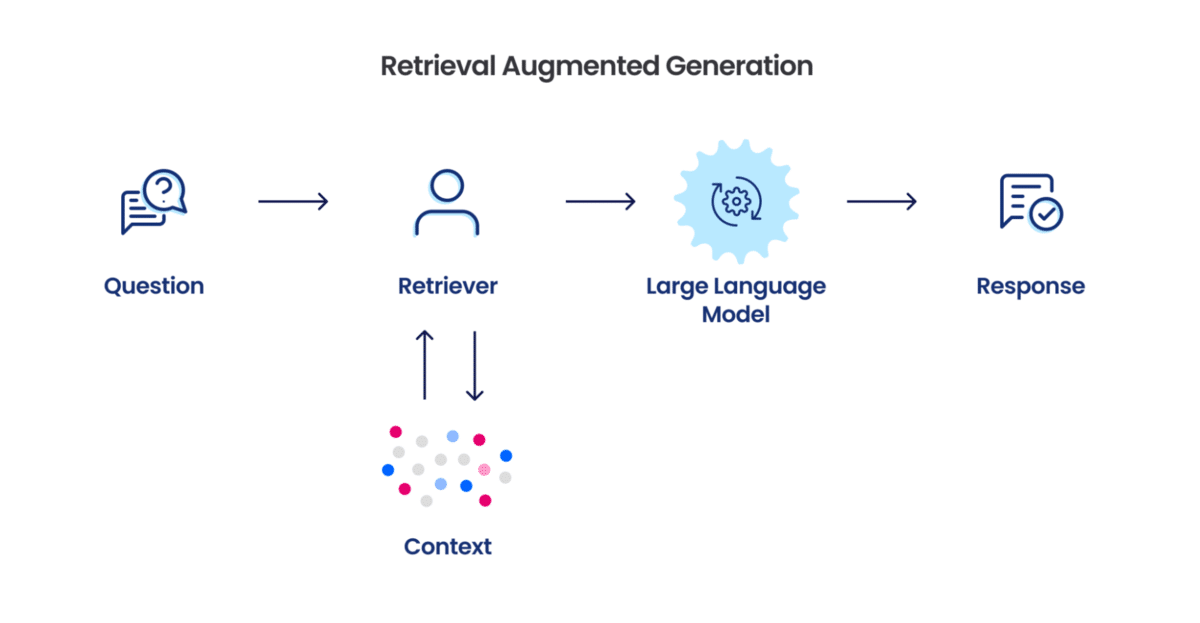

How Does RAG Model Work – A Step-by-Step Process

The working of Retrieval-Augmented Generation involves a five-step process:

- Knowledge Base Building: A RAG system first builds a knowledge repository by collecting and storing large amounts of text from the internet, books, or other sources. This knowledge can be structured data or unstructured text.

- Query Processing: When a user poses a question or query, the RAG model first processes the input to understand its context and intent. It identifies keywords and relevant information within the query.

- Retrieval: The model uses its knowledge repository to retrieve relevant information or passages that are likely to contain answers to the user’s query. This retrieval step is critical for providing accurate and contextually relevant responses.

- Augmentation: The retrieved information is then used to augment the model’s understanding of the query. This step helps the model refine its response generation by incorporating real-world knowledge.

- Generation: Finally, the RAG model generates a response based on the retrieved and augmented information. This response can be in the form of a natural language text or structured data, depending on the task.
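
The five steps above can be sketched end to end in a few lines of Python. This is a toy illustration under simplifying assumptions: the knowledge base is a short list, retrieval is plain keyword overlap, and `generate` is a placeholder that stands in for a real LLM call. All function names here are hypothetical.

```python
import re

STOPWORDS = {"a", "an", "the", "is", "of", "to", "in", "what", "how", "does"}

# Step 1: the knowledge repository (here, just a list of passages).
knowledge_base = [
    "RAG combines information retrieval with text generation.",
    "Paris is the capital of France.",
    "Retrieval models rank passages by relevance to a query.",
]


def process_query(query: str) -> set[str]:
    """Step 2: lowercase the query and keep its content words."""
    return set(re.findall(r"[a-z0-9]+", query.lower())) - STOPWORDS


def retrieve(keywords: set[str], passages: list[str], top_k: int = 2) -> list[str]:
    """Step 3: rank passages by how many query keywords they share."""
    scored = []
    for passage in passages:
        words = set(re.findall(r"[a-z0-9]+", passage.lower()))
        overlap = len(keywords & words)
        if overlap:
            scored.append((overlap, passage))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [passage for _, passage in scored[:top_k]]


def augment(query: str, passages: list[str]) -> str:
    """Step 4: combine the retrieved passages with the original question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}"


def generate(prompt: str) -> str:
    """Step 5: placeholder for an LLM call; it just repeats the top passage."""
    lines = [line[2:] for line in prompt.splitlines() if line.startswith("- ")]
    return f"Based on the retrieved context: {lines[0]}" if lines else "I do not know."


query = "What is the capital of France?"
passages = retrieve(process_query(query), knowledge_base)
answer = generate(augment(query, passages))
print(answer)
```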

RAG is particularly effective in situations where the answers may not be present in a pre-defined dataset but require real-time information from the web.

Retrieval Augmented Generation Process (Image source – Snorkel)

It bridges the gap between traditional generative models and information retrieval systems, allowing AI systems to provide more up-to-date and contextually relevant responses to user queries.

The Role of LLM In RAG

Large Language Models (LLMs) are integral to the Retrieval-Augmented Generation (RAG) framework, enhancing AI’s response quality with deep language understanding and contextual awareness. LLMs interpret queries, align retrieved information to context, and generate dynamic, human-like responses. With fine-tuning or user feedback, they can also be adapted over time to improve how the RAG system handles queries.

In short, LLMs are what make RAG systems better at understanding questions and communicating the information they retrieve.

The Significance of External Data in RAG

External data is crucial for RAG systems as it allows them to access a wide array of information beyond their initial training data.

This enables the generation of responses that are current and relevant to real-world events and information.

Types of Data Sources

RAG systems can tap into various data sources from structured databases to unstructured text documents.
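
As a rough illustration, structured rows and unstructured text can both be turned into the same kind of "document" before indexing. The sketch below uses Python’s built-in `sqlite3` module for the structured side. The `Document` class, the `products` table, and the sample manual text are made up for this example.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class Document:
    text: str
    source: str


def load_product_rows(conn: sqlite3.Connection) -> list[Document]:
    """Turn each structured row into a short sentence the retriever can index."""
    rows = conn.execute("SELECT name, price FROM products ORDER BY name").fetchall()
    return [Document(f"{name} costs ${price:.2f}.", "products table") for name, price in rows]


def split_paragraphs(text: str, source: str) -> list[Document]:
    """Split unstructured text into one document per paragraph."""
    return [Document(p.strip(), source) for p in text.split("\n\n") if p.strip()]


# Structured source: an in-memory database with a hypothetical products table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, price REAL)")
conn.executemany("INSERT INTO products VALUES (?, ?)", [("Widget", 9.5), ("Gadget", 24.0)])

# Unstructured source: free text, such as a user manual.
manual = "Widgets ship within two days.\n\nGadgets include a one-year warranty."

corpus = load_product_rows(conn) + split_paragraphs(manual, "user manual")
for doc in corpus:
    print(f"[{doc.source}] {doc.text}")
```

Keeping the `source` next to each text makes it possible to show users where an answer came from.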

Real-time Databases and APIs

These provide up-to-the-minute data, which is essential for RAG systems to deliver timely and accurate information.

Benefits of Using RAG in AI

Retrieval-Augmented Generation (RAG) systems bring a suite of benefits to the field of Artificial Intelligence (AI), particularly in how information is processed and presented. Here are some of the key advantages:

- Enhanced Information Retrieval: RAG systems combine the generative capabilities of language models with the vast storage of external databases. This synergy allows for the retrieval of precise information that is relevant to the user’s query, leading to more accurate and informative responses.

- Up-to-date Content: By leveraging real-time databases and APIs, RAG systems can provide the most current information available. This is particularly beneficial for topics where new data is constantly emerging, such as news, stock prices, or weather forecasts.

- Contextual Understanding: RAG systems are adept at understanding the context of queries, which enables them to retrieve and generate content that is not just factually correct but also contextually appropriate. This is crucial for maintaining a natural and relevant dialogue with users.

- Scalability: As the amount of available information grows, RAG systems can scale their retrieval processes to handle the increased data. This helps keep the quality of the generated content from diminishing over time.

- Learning and Adaptation: RAG systems can improve over time. Updating the knowledge base with new information and incorporating user feedback refines their responses for future queries.

- Efficiency: By automating the retrieval and generation of content, RAG systems can significantly reduce the time and effort required to produce high-quality responses. This efficiency is invaluable in applications such as customer support and content creation.

- Personalization: RAG systems can tailor their responses to the individual user by considering past interactions and preferences. This personalization leads to a more engaging and satisfying user experience.

- Multilingual and Cross-Domain Utility: RAG systems are not limited to a single language or domain. They can be trained to operate in multiple languages and across various fields, making them versatile tools for global and cross-disciplinary applications.

- Innovation: By providing a more sophisticated approach to generating responses, RAG systems open up new possibilities for innovative applications in AI. They enable more complex and creative uses of AI in areas such as interactive storytelling, educational tools, and more.

Applications and Use-Cases

Enhancing Conversational AI with RAG (Chatbots & AI Assistants)

In the burgeoning field of conversational AI, RAG-powered systems are redefining user interactions. These advanced systems are adept at parsing through extensive databases to provide answers that are not only relevant but also intricately detailed, fostering a more dynamic and engaging dialogue with users.

Revolutionizing Educational Resources

RAG technology is transforming educational platforms, granting students the ability to query a vast expanse of scholarly content. This access to detailed explanations and contextual information drawn from authoritative texts enhances the learning experience, paving the way for a more profound grasp of complex subjects.

Streamlining Legal Operations

For legal practitioners, RAG models are proving to be invaluable by optimizing the review and research phases of legal work. They adeptly condense and clarify legal statutes, case law, and extensive documentation, thereby enhancing efficiency and precision in legal operations.

Advancing Medical Diagnostics

In the realm of healthcare, RAG models are becoming indispensable tools for medical professionals. They offer a portal to the most recent medical studies and guidelines, which is instrumental in supporting accurate diagnoses and formulating effective treatment strategies.

Refining Language Translation Services

RAG’s application in language translation is marked by its ability to incorporate contextual understanding, thereby achieving translations that are not only linguistically accurate but also culturally and technically appropriate, especially in specialized sectors.

Innovating Content Creation

Content creators can utilize RAG to generate ideas and draft content that is both original and informed by a vast array of sources. This can lead to more creative and informed writing, particularly in journalism and creative industries.

Optimizing Search Engine Efficiency

Search engines can integrate RAG to improve the relevance and accuracy of search results. By accessing and understanding a broader context, RAG can help deliver search results that better match user intent and inquiries.

Enhancing Customer Support Systems

Customer support can be revolutionized with RAG by providing representatives with immediate access to relevant information, reducing response times, and increasing the accuracy of support provided to customers.

Future Prospects of RAG and LLM

Emerging advancements in RAG will refine how these models retrieve information, aiming for unparalleled precision and speed. This will ensure LLMs can tap into relevant data swiftly.

RAG’s integration with multimodal AI is set to revolutionize how it interacts with various data types, enhancing the richness and contextuality of responses. This will pave the way for novel applications across different sectors. Moreover, industry-specific RAG applications are on the horizon, particularly in healthcare, law, finance, and education, where they will perform specialized tasks with greater efficiency.

Meanwhile, research in RAG will continue to break new ground, driving the creation of more sophisticated, accurate, and flexible models.

LLMs will evolve to include advanced retrieval capabilities as a standard feature, enabling them to access external knowledge more effectively and provide more context-aware responses.

A Practical Guide to Implementing a Basic RAG Model

Embarking on the journey to set up a simple RAG model involves a series of steps that, while straightforward, require attention to detail to ensure a successful implementation.

Initial Setup:

- Environment Preparation: Begin by setting up your development environment. This typically involves installing the necessary libraries and dependencies, such as TensorFlow or PyTorch, and ensuring that your hardware is capable of handling the computational load.

- Data Gathering: Collect a dataset that your RAG model will use for training. This dataset should be relevant to the task at hand and properly annotated.

- Model Selection: Choose a pre-trained language model as the foundation for your RAG. This could be a model like BERT or GPT, which has been trained on a large corpus of text.

- Retrieval Database Setup: Create a retrieval database that contains the documents or information your RAG will pull from. This database should be indexed for efficient searching.
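
To give a feel for what "indexed for efficient searching" can mean, here is a minimal sketch of an inverted index, one classic indexing structure. It is an illustration only; production systems typically rely on a search engine or vector database, and the document ids and texts below are invented.

```python
import re
from collections import defaultdict


def build_inverted_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map each term to the ids of the documents that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in re.findall(r"[a-z0-9]+", text.lower()):
            index[term].add(doc_id)
    return dict(index)


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return ids of documents that contain every term in the query."""
    terms = re.findall(r"[a-z0-9]+", query.lower())
    if not terms:
        return set()
    # Copy the first posting set so the index itself is never modified.
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


docs = {
    "d1": "Refund policy for online orders",
    "d2": "Shipping policy and delivery times",
    "d3": "How to reset your password",
}
index = build_inverted_index(docs)
print(sorted(search(index, "policy")))
print(sorted(search(index, "refund policy")))
```

The index is built once, so each query only looks up its own terms instead of scanning every document.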

Model Training:

- Fine-tuning: Adjust the pre-trained model with your specific dataset, which allows the model to learn from the context and nuances of your application.

- Retrieval Mechanism Integration: Integrate the retrieval mechanism, ensuring that your model can query the retrieval database effectively during the generation process.

- Evaluation: Test the model’s performance using a separate validation set to ensure that it can retrieve and generate responses accurately.
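
For the evaluation step, one simple way to check the retrieval side is recall@k: of the documents that should have been found, how many appear in the top k results? The metric choice and the tiny validation set below are assumptions made for illustration.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of relevant documents that appear in the top-k retrieved list."""
    if not relevant:
        return 0.0
    hits = len(set(retrieved[:k]) & relevant)
    return hits / len(relevant)


# Hypothetical validation examples: what the system returned vs. what was expected.
validation_set = [
    {"retrieved": ["d3", "d1", "d7"], "relevant": {"d1"}},
    {"retrieved": ["d2", "d9", "d4"], "relevant": {"d4", "d5"}},
]

scores = [recall_at_k(ex["retrieved"], ex["relevant"], k=3) for ex in validation_set]
mean_recall = sum(scores) / len(scores)
print(f"mean recall@3 = {mean_recall:.2f}")
```

Generated answers need their own checks as well, for example comparing them against reference answers, which this sketch does not cover.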

Advanced Configurations:

- Hyperparameter Optimization: Experiment with different hyperparameters to find the optimal settings for your model. This includes learning rates, batch sizes, and the number of layers in the neural network.

- Cross-Validation: Implement cross-validation techniques to better generalize the model and prevent overfitting.

- Scalability Enhancements: Make adjustments to allow your model to scale with increased data or more complex queries, which may involve more sophisticated indexing strategies or distributed computing techniques.

- Multimodal Capabilities: If your application requires, extend the RAG to handle multimodal data by incorporating image or video processing layers.
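
The cross-validation item in the list above can be sketched without any machine learning library. The helper below (a hypothetical name, written for this example) splits sample indices into k folds so that every sample is used for validation exactly once.

```python
def k_fold_indices(n_samples: int, k: int) -> list[tuple[list[int], list[int]]]:
    """Split range(n_samples) into k (train, validation) index pairs."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    base, extra = divmod(n_samples, k)
    folds = []
    start = 0
    for i in range(k):
        # The first `extra` folds get one additional sample each.
        size = base + (1 if i < extra else 0)
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        folds.append((train, validation))
        start += size
    return folds


folds = k_fold_indices(10, 3)
for train, validation in folds:
    print(f"train on {len(train)} samples, validate on {validation}")
```

In practice you would shuffle the data first and then train and score the model once per fold, averaging the results.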

By following this guide, you can set up a simple RAG model and then expand its capabilities through advanced configurations, tailoring it to meet the specific needs of your application.

Conclusion

In conclusion, Retrieval-Augmented Generation (RAG) is far more than a mere buzzword; it is a pivotal innovation that is redefining the AI landscape. By enhancing the precision of large language models and incorporating real-time data retrieval, RAG opens up a plethora of applications. As we navigate an era where data is king, the mastery of RAG technology stands as an important differentiator.

For those eager to harness the power of RAG without delving into the complexities of code, CodeConductor offers a seamless solution.

This AI software development platform empowers you to leverage RAG technology, enabling your applications to think, reason, and learn with unprecedented accuracy. Whether you’re looking to develop a website, e-commerce store, SaaS app, or stand-alone landing pages, CodeConductor is your gateway.

Founder CodeConductor